How to choose and deploy industry-specific AI models

As artificial intelligence advances, generic AI models that were once cutting-edge, such as Google Cloud’s Vision AI and Amazon Rekognition, are becoming commonplace.

While effective in some use cases, these solutions do not meet industry-specific needs out of the box. Organizations that want the most accurate results from their AI projects will need to turn to industry-specific models.

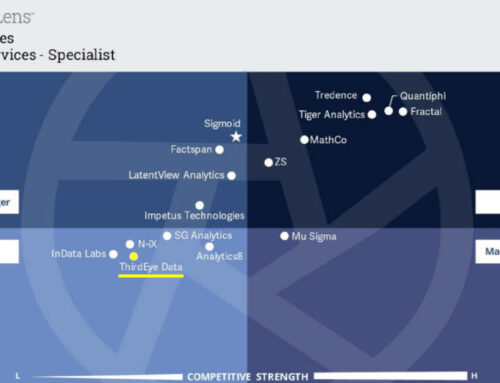

There are a few ways companies can get industry-specific results. One is a hybrid approach: take an open-source generic AI model and train it further to align with the business’s specific needs. Companies can also turn to third-party vendors, such as IBM or C3, for a complete off-the-shelf solution. Or, if necessary, data science teams can build their own models in-house from scratch.

Let’s dive into each of these approaches and how businesses can decide which one works for their distinct circumstances.

Generic models alone often don’t cut it

Generic AI models like Vision AI or Rekognition, and open-source models built with frameworks like TensorFlow or scikit-learn, often fall short on niche use cases in industries such as finance or energy. Many businesses have unique needs, and a model that lacks an industry’s contextual data is unlikely to produce relevant results.

Building on top of open-source models

At ThirdEye Data, we recently worked with a utility company on using AI to analyze thousands of images of electric poles, reading the tags on the poles and detecting defects. We started with the Google Cloud Vision API and found that it could not produce the results we needed: its precision and recall were far too low to be usable. It failed to read the characters on the pole tags 90% of the time because it could not recognize the nonstandard font and varying background colors used on the tags.

So we took base computer vision models from TensorFlow and adapted them to the utility company’s precise needs. After two months developing models to detect and decipher the tags on the poles, and another two months training them, the models are now achieving accuracy above 90%. We expect accuracy to keep improving with further retraining iterations.
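The accuracy figure above is not defined in detail. For reading tag text, two common options are exact-match accuracy (the whole tag is read correctly) and character accuracy derived from edit distance. The sketch below computes both; the function names and tag strings are hypothetical, and these are not necessarily the metrics used in the project described here.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions needed to turn string a into string b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute, free if characters match
            ))
        prev = curr
    return prev[-1]


def tag_reading_scores(truth: list[str], predicted: list[str]) -> tuple[float, float]:
    """Return (exact-match accuracy, character accuracy) for predicted tag strings."""
    if not truth or len(truth) != len(predicted):
        raise ValueError("need equal-length, non-empty lists")
    total_chars = sum(len(t) for t in truth)
    if total_chars == 0:
        raise ValueError("ground-truth tags must not all be empty")
    exact = sum(t == p for t, p in zip(truth, predicted)) / len(truth)
    errors = sum(levenshtein(t, p) for t, p in zip(truth, predicted))
    # Clamp at 0: a wildly wrong reading can need more edits than there are characters.
    char_accuracy = max(0.0, 1.0 - errors / total_chars)
    return exact, char_accuracy


# Hypothetical pole-tag IDs and model readings.
truth = ["A1234", "B5678", "C9012", "D3456"]
predicted = ["A1234", "B5G78", "C9012", "D345"]
exact, chars = tag_reading_scores(truth, predicted)
print(f"exact match: {exact:.0%}, character accuracy: {chars:.0%}")  # exact match: 50%, character accuracy: 90%
```

In this made-up example the character accuracy is 90% while only half the tags are read exactly, so it matters which definition a reported accuracy figure uses.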

Any team looking to expand its AI capabilities should first apply its data and use cases to a generic model and assess the results. Companies can start with open-source algorithms available in AI and ML frameworks such as TensorFlow, scikit-learn or Microsoft Cognitive Toolkit. At ThirdEye Data, we used convolutional neural network (CNN) algorithms in TensorFlow.
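Assessing a generic model typically means running it on labeled examples from your own data and scoring its output. The utility project judged the Google Vision API on precision and recall; as a minimal sketch, assuming one binary defect/no-defect label per image, those two metrics can be computed as follows. The function name and labels are hypothetical; `precision_score` and `recall_score` in scikit-learn's `sklearn.metrics` perform the same calculation.

```python
from typing import Sequence


def precision_recall(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[float, float]:
    """Precision and recall for a binary detector where 1 means 'defect'."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # defects correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # defects missed
    # Report 0.0 instead of dividing by zero when nothing is flagged or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical ground truth and generic-model predictions for ten pole images.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 0, 0, 1, 0]
precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f}, recall={recall:.2f}")  # precision=0.75, recall=0.60
```

Precision asks how many of the images flagged as defective really are; recall asks how many of the truly defective poles were flagged. A generic model can fail on either, and the acceptable level for each depends on the use case.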

Then, if the results are insufficient, the team can extend the algorithm by training it further on its own industry-specific data.
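Beyond using CNNs in TensorFlow, the training procedure is not described. As a toy illustration of the general idea of starting from an existing model's weights and continuing to train on domain data (often called fine-tuning), the pure-Python sketch below uses a two-feature logistic regression in place of a CNN. The "pretrained" weights, the synthetic data and all function names are hypothetical. In TensorFlow, the comparable pattern is usually to load a pretrained network from `tf.keras.applications`, freeze it by setting `trainable = False`, and train a new classification head on labeled industry images.

```python
import math
import random

# Hypothetical "pretrained" weights, standing in for a model trained on generic data.
# This toy model predicts class 1 whenever the first feature is non-negative.
PRETRAINED_W = (1.0, 0.0)
PRETRAINED_B = 0.0


def sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x) -> float:
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def accuracy(w, b, data) -> float:
    correct = sum((predict_proba(w, b, x) >= 0.5) == (y == 1) for x, y in data)
    return correct / len(data)


def make_domain_data(n: int, seed: int):
    """Synthetic 'industry' data: the label depends only on the second feature."""
    rng = random.Random(seed)
    data = []
    while len(data) < n:
        x = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        if abs(x[1] - 0.3) < 0.1:  # leave a gap around the true boundary x[1] = 0.3
            continue
        data.append((x, 1 if x[1] > 0.3 else 0))
    return data


def fine_tune(w, b, data, lr: float = 2.0, epochs: int = 1000):
    """Continue full-batch gradient descent on log loss, starting from (w, b)."""
    w = list(w)  # copy, so the pretrained weights are not modified
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for x, y in data:
            err = predict_proba(w, b, x) - y  # d(log loss)/dz for one example
            grad_w[0] += err * x[0]
            grad_w[1] += err * x[1]
            grad_b += err
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


train = make_domain_data(200, seed=0)
held_out = make_domain_data(200, seed=1)

before = accuracy(PRETRAINED_W, PRETRAINED_B, held_out)
w, b = fine_tune(PRETRAINED_W, PRETRAINED_B, train)
after = accuracy(w, b, held_out)
print(f"held-out accuracy before: {before:.2f}, after fine-tuning: {after:.2f}")
```

Because the synthetic labels ignore the feature the "generic" weights rely on, those weights score near chance until training continues on the domain data. Real image models need far more labeled data, and the gain should always be checked on a held-out set, as `held_out` does here.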