Drill is an Apache open-source SQL query engine for Big Data exploration. Apache Drill is designed from the ground up to support high-performance analysis on the semi-structured and rapidly evolving data coming from modern Big Data applications, while still providing the familiarity and ecosystem of ANSI SQL, the industry-standard query language. Drill provides plug-and-play integration with existing Apache Hive and Apache HBase deployments.

Drill 1.12 provides the following new features and improvements:

The ST_AsGeoJSON and ST_AsJSON functions, which return GeoJSON and JSON representations (DRILL-5962, DRILL-5960)

It takes just a few minutes to get started with Drill. Untar the Drill software on your Linux, Mac, or Windows laptop and run a query on a local file. There is no need to set up any infrastructure or to define schemas. Just point to the data, such as data in a file, directory, or HBase table, and drill.

    $ tar -xvf apache-drill-.tar.gz
    $ /bin/drill-embedded
    0: jdbc:drill:zk=local> SELECT * FROM cp.`employee.json` LIMIT 5;
    +--------------+----------------------------+---------------------+---------------+--------------+----------------------------+-----------+----------------+-------------+------------------------+----------+----------------+----------------------+-----------------+---------+-----------------------+
    | employee_id | full_name | first_name | last_name | position_id | position_title | store_id | department_id | birth_date | hire_date | salary | supervisor_id | education_level | marital_status | gender | management_role |
    +--------------+----------------------------+---------------------+---------------+--------------+----------------------------+-----------+----------------+-------------+------------------------+----------+----------------+----------------------+-----------------+---------+-----------------------+
    | 1 | Sheri Nowmer | Sheri | Nowmer | 1 | President | 0 | 1 | 1961-08-26 | 1994-12-01 00:00:00.0 | 80000.0 | 0 | Graduate Degree | S | F | Senior Management |
    | 2 | Derrick Whelply | Derrick | Whelply | 2 | VP Country Manager | 0 | 1 | 1915-07-03 | 1994-12-01 00:00:00.0 | 40000.0 | 1 | Graduate Degree | M | M | Senior Management |
    | 4 | Michael Spence | Michael | Spence | 2 | VP Country Manager | 0 | 1 | 1969-06-20 | 1998-01-01 00:00:00.0 | 40000.0 | 1 | Graduate Degree | S | M | Senior Management |
    | 5 | Maya Gutierrez | Maya | Gutierrez | 2 | VP Country Manager | 0 | 1 | 1951-05-10 | 1998-01-01 00:00:00.0 | 35000.0 | 1 | Bachelors Degree | M | F | Senior Management |

Drill is the world’s first and only distributed SQL engine that doesn’t require schemas. It shares the same schema-free JSON model as MongoDB and Elasticsearch. No need to define and maintain schemas or transform data (ETL). Drill automatically understands the structure of the data.

Using Drill’s schema-free JSON model, you can query complex, semi-structured data in situ. No need to flatten or transform the data prior to or during query execution. Drill also provides intuitive extensions to SQL to work with nested data. Here’s a simple query on a JSON file demonstrating how to access nested elements and arrays:

    SELECT * FROM (SELECT t.trans_id,
                          t.trans_info.prod_id[0] AS prod_id,
                          t.trans_info.purch_flag AS purchased
                   FROM `clicks/clicks.json` t) sq
    WHERE sq.prod_id BETWEEN 700 AND 750 AND
          sq.purchased = 'true'
    ORDER BY sq.prod_id;
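
To make the semantics of that nested access concrete, here is a minimal plain-Python sketch of what the query computes. It does not call Drill. The sample records and their values are invented for illustration, and treating an empty array's first element as NULL is an assumption of the sketch rather than a statement about Drill's behavior.

```python
# Hypothetical click records shaped like the fields the query touches.
# The values are invented for illustration only.
clicks = [
    {"trans_id": 1, "trans_info": {"prod_id": [710, 16], "purch_flag": "true"}},
    {"trans_id": 2, "trans_info": {"prod_id": [725], "purch_flag": "false"}},
    {"trans_id": 3, "trans_info": {"prod_id": [701, 740], "purch_flag": "true"}},
    {"trans_id": 4, "trans_info": {"prod_id": [980], "purch_flag": "true"}},
    {"trans_id": 5, "trans_info": {"prod_id": [], "purch_flag": "true"}},
]


def first_or_none(values):
    """Stand-in for prod_id[0]; an empty array is assumed to yield NULL (None)."""
    return values[0] if values else None


def run_query(records):
    # Inner SELECT: project the nested fields into flat columns.
    sq = [
        {
            "trans_id": r["trans_id"],
            "prod_id": first_or_none(r["trans_info"]["prod_id"]),
            "purchased": r["trans_info"]["purch_flag"],
        }
        for r in records
    ]
    # Outer WHERE: BETWEEN is inclusive at both ends; NULL never matches.
    kept = [
        row for row in sq
        if row["prod_id"] is not None
        and 700 <= row["prod_id"] <= 750
        and row["purchased"] == "true"
    ]
    # ORDER BY sq.prod_id
    return sorted(kept, key=lambda row: row["prod_id"])


result = run_query(clicks)
print([(row["trans_id"], row["prod_id"]) for row in result])
```

The point of the sketch is that the nested map and array are addressed directly, with no separate flattening step before the filter runs.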

Drill supports the standard SQL:2003 syntax. No need to learn a new “SQL-like” language or struggle with a semi-functional BI tool. Drill supports many data types including DATE, INTERVAL, TIMESTAMP, and VARCHAR, as well as complex query constructs such as correlated sub-queries and joins in WHERE clauses. Here is an example of a TPC-H standard query that runs in Drill:

    SELECT o.o_orderpriority, COUNT(*) AS order_count
    FROM orders o
    WHERE o.o_orderdate >= DATE '1996-10-01'
          AND o.o_orderdate < DATE '1996-10-01' + INTERVAL '3' month
          AND EXISTS(
                     SELECT * FROM lineitem l
                     WHERE l.l_orderkey = o.o_orderkey
                     AND l.l_commitdate < l.l_receiptdate
                     )
    GROUP BY o.o_orderpriority
    ORDER BY o.o_orderpriority;

Drill works with standard BI tools. You can use your existing tools, such as Tableau, MicroStrategy, QlikView and Excel.

Apache Drill lets you leverage your investments in Hive. You can run interactive queries with Drill on your Hive tables and access all Hive input/output formats (including custom SerDes). You can join tables associated with different Hive metastores, and you can join a Hive table with an HBase table or a directory of log files. Here’s a simple query in Drill on a Hive table:

    SELECT `month`, state, sum(order_total) AS sales
    FROM hive.orders
    GROUP BY `month`, state
    ORDER BY 3 DESC LIMIT 5;

Drill is extensible. You can connect Drill out-of-the-box to file systems (local or distributed, such as S3 and HDFS), HBase and Hive. You can implement a storage plugin to make Drill work with any other data source. Drill can combine data from multiple data sources on the fly in a single query, with no centralized metadata definitions. Here’s a query that combines data from a Hive table, an HBase table (view) and a JSON file:

    SELECT custview.membership, sum(orders.order_total) AS sales
    FROM hive.orders, custview, dfs.`clicks/clicks.json` c
    WHERE orders.cust_id = custview.cust_id AND orders.cust_id = c.user_info.cust_id
    GROUP BY custview.membership
    ORDER BY 2;

Drill exposes a simple, high-performance Java API to build custom user-defined functions (UDFs) for adding your own business logic to Drill. Drill also supports Hive UDFs. If you have already built UDFs in Hive, you can reuse them with Drill with no modifications.

Drill is designed from the ground up for high throughput and low latency. It doesn’t use a general purpose execution engine like MapReduce, Tez or Spark. As a result, Drill is flexible (schema-free JSON model) and performant. Drill’s optimizer leverages rule- and cost-based techniques, as well as data locality and operator push-down, which is the capability to push down query fragments into the back-end data sources. Drill also provides a columnar and vectorized execution engine, resulting in higher memory and CPU efficiency.

Drill is available as a simple download you can run on your laptop. When you’re ready to analyze larger datasets, deploy Drill on your Hadoop cluster (up to 1000 commodity servers). Drill leverages the aggregate memory in the cluster to execute queries using an optimistic pipelined model, and automatically spills to disk when the working set doesn’t fit in memory.

Apache Drill is a low latency distributed query engine for large-scale datasets, including structured and semi-structured/nested data. Inspired by Google’s Dremel, Drill is designed to scale to several thousands of nodes and query petabytes of data at interactive speeds that BI/Analytics environments require.

Drill is also useful for short, interactive ad-hoc queries on large-scale data sets. Drill is capable of querying nested data in formats like JSON and Parquet and performing dynamic schema discovery. Drill does not require a centralized metadata repository.

Drill includes a distributed execution environment, purpose-built for large-scale data processing. At the core of Apache Drill is the “Drillbit” service, which is responsible for accepting requests from the client, processing the queries, and returning results to the client.

A Drillbit service can be installed and run on all of the required nodes in a Hadoop cluster to form a distributed cluster environment. When a Drillbit runs on each data node in the cluster, Drill can maximize data locality during query execution without moving data over the network or between nodes. Drill uses ZooKeeper to maintain cluster membership and health-check information.

Though Drill works in a Hadoop cluster environment, Drill is not tied to Hadoop and can run in any distributed cluster environment. The only prerequisite for Drill is ZooKeeper.

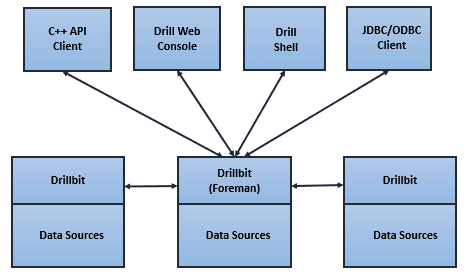

You can access Drill through the following interfaces:

Drill does not require schema or type specification for data in order to start the query execution process. Drill starts data processing in record batches and discovers the schema during processing. Self-describing data formats such as Parquet, JSON, and Avro, as well as NoSQL databases, have their schema specified as part of the data itself, which Drill leverages dynamically at query time. Because the schema can change over the course of a Drill query, many Drill operators are designed to reconfigure themselves when schemas change.
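
The idea of discovering a schema batch by batch can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Drill's implementation: the function names `infer_schema` and `process` and the sample records are hypothetical, and a "schema" here is just a mapping from field name to Python type name.

```python
def infer_schema(batch):
    """Derive {field: type name} from the records in one batch."""
    schema = {}
    for record in batch:
        for field, value in record.items():
            schema.setdefault(field, type(value).__name__)
    return schema


def process(batches):
    """Process batches in order, noting each point where the schema changes."""
    current = None
    schema_changes = []
    rows_seen = 0
    for index, batch in enumerate(batches):
        schema = infer_schema(batch)
        if schema != current:
            # A real operator would reconfigure itself here; the sketch only records it.
            schema_changes.append((index, schema))
            current = schema
        rows_seen += len(batch)
    return rows_seen, schema_changes


# Hypothetical input: the third batch introduces a new nested field.
batches = [
    [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}],
    [{"id": 3, "name": "c"}],
    [{"id": 4, "name": "d", "tags": ["x"]}],
]

rows_seen, schema_changes = process(batches)
for index, schema in schema_changes:
    print(index, schema)
```

No schema is declared up front. The first batch establishes one, and the later batch that carries an extra field triggers a change mid-stream.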

Drill allows access to nested data attributes, as if they were SQL columns, and provides intuitive extensions to easily operate on them. From an architectural point of view, Drill provides a flexible hierarchical columnar data model that can represent complex, highly dynamic and evolving data models. Relational data in Drill is treated as a special or simplified case of complex/multi-structured data.

Drill does not have a centralized metadata requirement. You do not need to create and manage tables and views in a metadata repository, or rely on a database administrator group for such a function. Drill metadata is derived through the storage plugins that correspond to data sources. Storage plugins provide a spectrum of metadata, ranging from full metadata (Hive) to partial metadata (HBase) to no central metadata (files). Decentralized metadata means that Drill is not tied to a single Hive repository. You can query multiple Hive repositories at once and then combine the data with information from HBase tables or with a file in a distributed file system. You can also use SQL DDL statements to create metadata within Drill, which gets organized just like a traditional database. Drill metadata is accessible through the ANSI standard INFORMATION_SCHEMA database.

Drill provides an extensible architecture at all layers, including the storage plugin, query, query optimization/execution, and client API layers. You can customize any layer for the specific needs of an organization or you can extend the layer to a broader array of use cases. Drill uses classpath scanning to find and load plugins, and to add additional storage plugins, functions, and operators with minimal configuration.

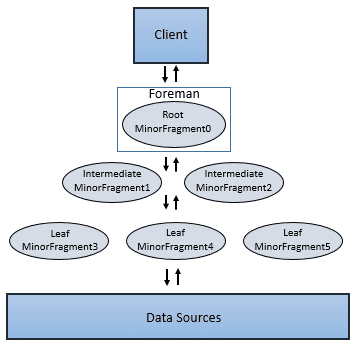

When you submit a Drill query, a client or an application sends the query in the form of an SQL statement to a Drillbit in the Drill cluster. A Drillbit is the process running on each active Drill node that coordinates, plans, and executes queries, as well as distributes query work across the cluster to maximize data locality.

The following image represents the communication between clients, applications, and Drillbits:

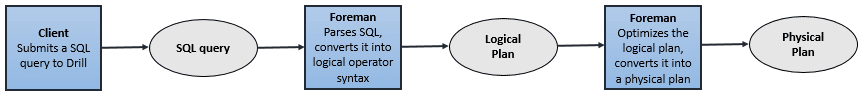

The Drillbit that receives the query from a client or application becomes the Foreman for the query and drives the entire query. A parser in the Foreman parses the SQL, applying custom rules to convert specific SQL operators into a specific logical operator syntax that Drill understands. This collection of logical operators forms a logical plan. The logical plan describes the work required to generate the query results and defines which data sources and operations to apply.

The Foreman sends the logical plan to a cost-based optimizer, which reads the plan and optimizes the order of SQL operators in the statement. The optimizer applies various types of rules to rearrange operators and functions into an optimal plan. The optimizer converts the logical plan into a physical plan that describes how to execute the query.

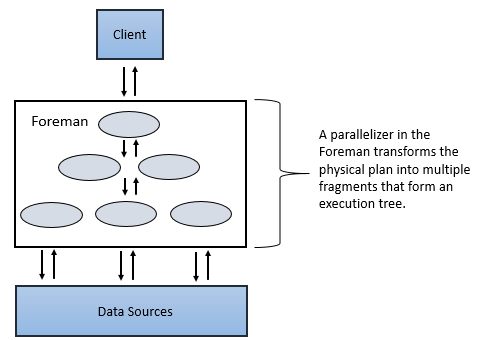

A parallelizer in the Foreman transforms the physical plan into multiple phases, called major and minor fragments. These fragments create a multi-level execution tree that rewrites the query and executes it in parallel against the configured data sources, sending the results back to the client or application.

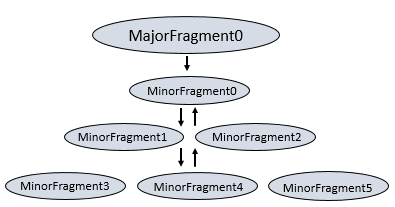

A major fragment is a concept that represents a phase of the query execution. A phase can consist of one or multiple operations that Drill must perform to execute the query. Drill assigns each major fragment a MajorFragmentID.

For example, to perform a hash aggregation of two files, Drill may create a plan with two major phases (major fragments) where the first phase is dedicated to scanning the two files and the second phase is dedicated to the aggregation of the data.

Drill uses an exchange operator to separate major fragments. An exchange is a change in data location and/or parallelization of the physical plan. An exchange is composed of a sender and a receiver to allow data to move between nodes.

Major fragments do not actually perform any query tasks. Each major fragment is divided into one or multiple minor fragments (discussed in the next section) that actually execute the operations required to complete the query and return results back to the client.

You can work with major fragments within the physical plan by capturing a JSON representation of the plan in a file, manually modifying it, and then submitting it back to Drill using the SUBMIT PLAN command. You can also view major fragments in the query profile, which is visible in the Drill Web Console. See EXPLAIN and Query Profiles for more information.

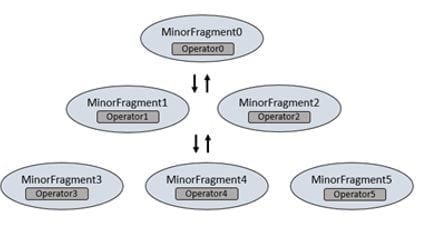

Each major fragment is parallelized into minor fragments. A minor fragment is a logical unit of work that runs inside a thread. A logical unit of work in Drill is also referred to as a slice. The execution plan that Drill creates is composed of minor fragments. Drill assigns each minor fragment a MinorFragmentID.

The parallelizer in the Foreman creates one or more minor fragments from a major fragment at execution time, by breaking a major fragment into as many minor fragments as it can usefully run at the same time on the cluster.

Drill executes each minor fragment in its own thread as quickly as possible based on its upstream data requirements. Drill schedules the minor fragments on nodes with data locality. Otherwise, Drill schedules them in a round-robin fashion on the existing, available Drillbits.
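
The placement rule described above (prefer a node that holds the data, otherwise fall back to round-robin) can be sketched as follows. This is an illustrative model only: `assign_fragments`, the node names, and the fragment labels are hypothetical and do not reflect Drill's internal scheduler API.

```python
from itertools import cycle


def assign_fragments(fragments, drillbits):
    """Map each minor fragment to a Drillbit.

    fragments: list of (fragment_id, preferred_node or None)
    drillbits: list of available node names
    """
    round_robin = cycle(drillbits)
    assignment = {}
    for fragment_id, preferred in fragments:
        if preferred in drillbits:
            # Data locality: run where the data already lives.
            assignment[fragment_id] = preferred
        else:
            # No usable locality hint: take the next node in rotation.
            assignment[fragment_id] = next(round_robin)
    return assignment


# Hypothetical cluster and fragments; "node-x" is not an available Drillbit.
drillbits = ["node-a", "node-b", "node-c"]
fragments = [
    ("1-0", "node-b"),
    ("1-1", None),
    ("1-2", "node-x"),
    ("1-3", None),
    ("1-4", "node-c"),
]

assignment = assign_fragments(fragments, drillbits)
for fragment_id, node in assignment.items():
    print(fragment_id, "->", node)
```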

Minor fragments contain one or more relational operators. An operator performs a relational operation, such as scan, filter, join, or group by. Each operator has a particular operator type and an OperatorID. Each OperatorID defines its relationship within the minor fragment to which it belongs. See Physical Operators.

For example, when performing a hash aggregation of two files, Drill breaks the first phase dedicated to scanning into two minor fragments. Each minor fragment contains scan operators that scan the files. Drill breaks the second phase dedicated to aggregation into four minor fragments. Each of the four minor fragments contains hash aggregate operators that perform the hash aggregation operations on the data.
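
The two-phase example can be mimicked with a small single-process Python sketch: two scan fragments, an exchange that routes rows by key, and four hash-aggregate fragments. The file contents, the `partition_of` routing function, and the choice of hash partitioning for the exchange are assumptions made for illustration and say nothing about which exchange Drill would actually pick.

```python
from collections import defaultdict

NUM_AGG_FRAGMENTS = 4

# Two hypothetical "files"; each row is (key, amount).
file_a = [("east", 10), ("west", 5), ("north", 1)]
file_b = [("east", 7), ("south", 3), ("west", 2)]


def scan(rows):
    """Phase 1 minor fragment: read one file."""
    return list(rows)


def partition_of(key):
    """Deterministic stand-in for a hash function (str hash() is salted per process)."""
    return sum(key.encode("utf-8")) % NUM_AGG_FRAGMENTS


def exchange(scanned_outputs):
    """Route each row to the aggregation fragment that owns its key."""
    inboxes = [[] for _ in range(NUM_AGG_FRAGMENTS)]
    for rows in scanned_outputs:
        for key, amount in rows:
            inboxes[partition_of(key)].append((key, amount))
    return inboxes


def hash_aggregate(rows):
    """Phase 2 minor fragment: sum amounts per key using a hash table."""
    totals = defaultdict(int)
    for key, amount in rows:
        totals[key] += amount
    return dict(totals)


scanned = [scan(file_a), scan(file_b)]                  # two scan minor fragments
inboxes = exchange(scanned)                             # exchange between the phases
partials = [hash_aggregate(rows) for rows in inboxes]   # four aggregate fragments

# Each key lands in exactly one fragment, so merging is a plain union.
totals = {}
for partial in partials:
    totals.update(partial)
print(sorted(totals.items()))
```

Because every row for a given key is routed to the same aggregation fragment, each fragment can finish its groups without consulting the others.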

You cannot modify the number of minor fragments within the execution plan. However, you can view the query profile in the Drill Web Console and modify some configuration options that change the behavior of minor fragments, such as the maximum number of slices. See Configuration Options.

Minor fragments can run as root, intermediate, or leaf fragments. An execution tree contains only one root fragment. The coordinates of the execution tree are numbered from the root, with the root being zero. Data flows downstream from the leaf fragments to the root fragment.

The root fragment runs in the Foreman and receives incoming queries, reads metadata from tables, rewrites the queries and routes them to the next level in the serving tree. The other fragments become intermediate or leaf fragments.

Intermediate fragments start work when data is available or fed to them from other fragments. They perform operations on the data and then send the data downstream. They also pass the aggregated results to the root fragment, which performs further aggregation and provides the query results to the client or application.

The leaf fragments scan tables in parallel and communicate with the storage layer or access data on local disk. The leaf fragments pass partial results to the intermediate fragments, which perform parallel operations on intermediate results.
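
A minimal sketch of such an execution tree, assuming a simple sum as the operation: leaves produce partial results from their own data, intermediate fragments combine what their children send, and the root produces the final answer. The `Fragment` class, its field names, and the sample values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Fragment:
    name: str
    rows: List[int] = field(default_factory=list)            # data scanned by a leaf
    children: List["Fragment"] = field(default_factory=list)

    def execute(self):
        """Return a partial sum; parents combine what their children send."""
        if not self.children:
            return sum(self.rows)            # leaf: scan local data
        return sum(child.execute() for child in self.children)


def role(fragment, root):
    """Classify a fragment by its position in the tree."""
    if fragment is root:
        return "root"
    return "intermediate" if fragment.children else "leaf"


# Hypothetical tree: one root, two intermediate fragments, four leaves.
leaves = [
    Fragment("leaf-0", rows=[1, 2]),
    Fragment("leaf-1", rows=[3]),
    Fragment("leaf-2", rows=[4, 5]),
    Fragment("leaf-3", rows=[6]),
]
intermediates = [
    Fragment("mid-0", children=leaves[:2]),
    Fragment("mid-1", children=leaves[2:]),
]
root = Fragment("root", children=intermediates)

print(root.execute())
print([role(f, root) for f in [root] + intermediates + leaves])
```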

Drill only plans queries that have concurrently running fragments. For example, if 20 available slices exist in the cluster, Drill plans a query that runs no more than 20 minor fragments in a particular major fragment. Drill is optimistic and assumes that it can complete all of the work in parallel. All minor fragments for a particular major fragment start at the same time based on their upstream data dependency.

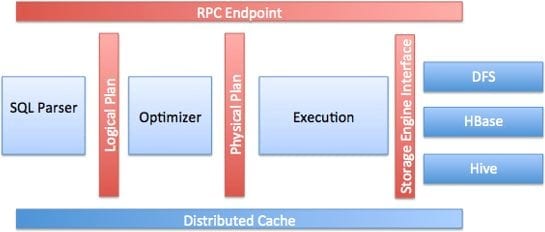

The following image represents components within each Drillbit:

The following list describes the key components of a Drillbit:

In the context of Hadoop, Drill provides storage plugins for distributed files and HBase. Drill also integrates with Hive using a storage plugin.

When users query files and HBase with Drill, they can do it directly or go through Hive if they have metadata defined there. Drill integration with Hive is only for metadata. Drill does not invoke the Hive execution engine for any requests.

Drill is designed from the ground up for high performance on large datasets. The following core elements of Drill processing are responsible for Drill’s performance:

Distributed engine

Drill provides a powerful distributed execution engine for processing queries. Users can submit requests to any node in the cluster. You can add new nodes to the cluster to scale for larger volumes of data to support more users or improve performance.

Columnar execution

Drill optimizes for both columnar storage and execution by using an in-memory data model that is hierarchical and columnar. When working with data stored in columnar formats such as Parquet, Drill avoids disk access for columns that are not involved in a query. Drill’s execution layer also performs SQL processing directly on columnar data without row materialization. The combination of optimizations for columnar storage and direct columnar execution significantly lowers memory footprints and provides faster execution of BI and analytic types of workloads.

Vectorization

Rather than operating on single values from a single table record at one time, vectorization in Drill allows the CPU to operate on vectors, referred to as record batches. A record batch has arrays of values from many different records. The technical basis for the efficiency of vectorized processing is modern chip technology with deep-pipelined CPU designs. Keeping all pipelines full to achieve efficiency near peak performance is impossible in traditional database engines, primarily due to code complexity.
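
The difference in data layout can be sketched in Python, using the employee IDs and salaries from the sample output earlier in this article. This only models the shape of a record batch (one typed array per column). Pure Python cannot show the CPU-level gains, and `to_record_batch` and the other function names are hypothetical.

```python
from array import array

# Row-oriented view: one dict per record.
rows = [
    {"employee_id": 1, "salary": 80000.0},
    {"employee_id": 2, "salary": 40000.0},
    {"employee_id": 4, "salary": 40000.0},
    {"employee_id": 5, "salary": 35000.0},
]


def to_record_batch(records):
    """Pivot row records into one typed value vector per column."""
    return {
        "employee_id": array("q", [r["employee_id"] for r in records]),
        "salary": array("d", [r["salary"] for r in records]),
    }


def sum_row_at_a_time(records):
    """Visit each record in turn and pick out the one field that matters."""
    total = 0.0
    for record in records:
        total += record["salary"]
    return total


def sum_vectorized(batch):
    """Operate on a single contiguous column vector; other columns are never touched."""
    return sum(batch["salary"])


batch = to_record_batch(rows)
print(sum_row_at_a_time(rows), sum_vectorized(batch))
```

Both functions return the same total. The columnar version reads one homogeneous array, which is the layout that lets an engine keep CPU pipelines busy.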

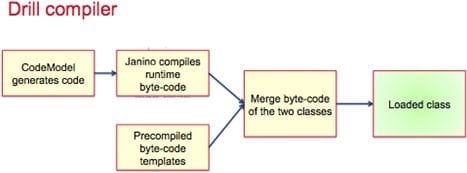

Runtime compilation

Runtime compilation enables faster execution than interpreted execution. Drill generates highly efficient custom code for every single query. The following image shows the Drill compilation/code generation process:

Optimistic and pipelined query execution

Using an optimistic execution model to process queries, Drill assumes that failures are infrequent within the short span of a query. Drill does not spend time creating boundaries or checkpoints to minimize recovery time. If a query fails, it is rerun. Drill execution uses a pipeline model where all tasks are scheduled at once. Query execution happens in memory as much as possible to move data through task pipelines, persisting to disk only if there is memory overflow.
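
A pipelined model can be illustrated with Python generators: each operator hands a batch downstream as soon as it is ready, so no stage waits for the previous one to finish all of its input. This is a single-threaded analogy under stated assumptions, not Drill's execution engine. The operator names and the event log are hypothetical, and spilling to disk is not modeled.

```python
def scan(batches, log):
    """Produce batches one at a time."""
    for i, batch in enumerate(batches):
        log.append(f"scan {i}")
        yield batch


def filter_op(upstream, predicate, log):
    """Filter each batch as soon as the scan hands it over."""
    for i, batch in enumerate(upstream):
        log.append(f"filter {i}")
        yield [value for value in batch if predicate(value)]


def collect(upstream):
    """Root of the pipeline: gather the final rows."""
    out = []
    for batch in upstream:
        out.extend(batch)
    return out


log = []
source = [[1, 2, 3], [4, 5, 6]]
result = collect(filter_op(scan(source, log), lambda v: v % 2 == 0, log))

print(result)
print(log)
```

The log shows scan and filter steps interleaving per batch, with no intermediate checkpoint between them. Under the optimistic model, a failure would mean running the whole pipeline again.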