AI – Past, Present, and Future

AI has gone through many cycles of ups and downs, with stunning progress as well as disappointing slumps. Fueled by Machine Learning, and specifically Deep Learning, there has been a lot of progress in the last 15 years. Still, we are nowhere near Artificial General Intelligence; it remains elusive. Here is a survey. The focus is on breadth, not depth. For a deeper study of any particular topic, follow the citations provided.

Some AI Quotes

“The development of full artificial intelligence could spell the end of the human race….It would take off on its own, and re-design itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.” –Stephen Hawking

“Nobody phrases it this way, but I think that artificial intelligence is almost a humanities discipline. It’s really an attempt to understand human intelligence and human cognition.” – Sebastian Thrun

“It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers… They would be able to converse with each other to sharpen their wits. At some stage, therefore, we should have to expect the machines to take control.” – Alan Turing

“Machine intelligence is the last invention that humanity will ever need to make.” – Nick Bostrom

“People worry that computers will get too smart and take over the world, but the real problem is that they’re too stupid and they’ve already taken over the world.” – Pedro Domingos

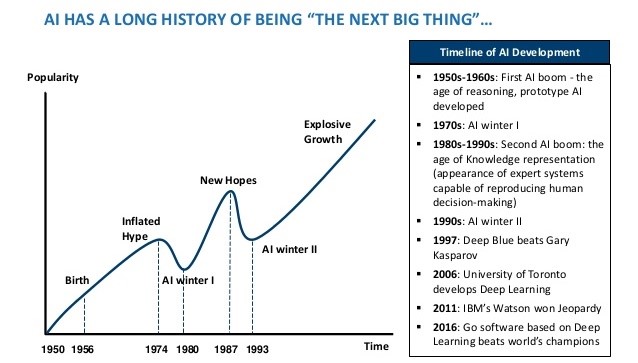

Evolution of AI

The first AI boom, in the sixties and seventies, was based on logic and intelligent search. An AI winter followed it.

The second boom was in the nineties, driven by knowledge representation and expert systems. Until the second boom, deductive inference was the primary engine of AI.

Currently, we are in the third boom, fueled by Deep Learning (DL), which started in the first decade of this century. The future is uncertain. Optimists think it’s an eternal AI spring. Others think Deep Learning is hitting a wall and another AI winter is around the corner.

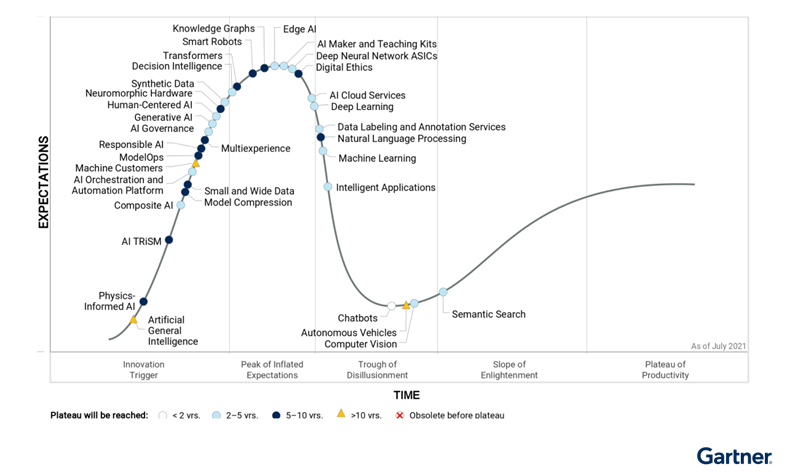

Current AI Hype Cycle

Gartner defines five phases in the hype cycle of any technology:

- Innovation trigger

- Peak of inflated expectations

- Trough of disillusionment

- Slope of enlightenment

- Plateau of productivity

Interestingly, only Semantic Search has graduated from the Trough of Disillusionment to the Slope of Enlightenment. Fully Self Driving (FSD) cars and chatbots are in the Trough of Disillusionment.

AI Impact on Industry

According to this Forbes article, these are the five industries where AI is making a significant impact:

- Energy

- Healthcare

- Aerospace

- Supply Chain

- Construction

The Top Five Sectors Where AI Is Poised To Make Huge Industrial Impacts In 2022

AI Adoption

- Only 23% of businesses have incorporated AI into processes and product/service offerings today

- The largest companies (those with at least 100,000 employees) are the most likely to have an AI strategy, but only half have one

- 47% of digitally mature organizations say they have a defined AI strategy

- 63% of businesses say pressure to reduce costs will require them to use AI

- 54% of executives say AI solutions implemented in their businesses have already increased productivity

The link below has many interesting statistics on AI adoption and the marketplace:

Statistics About Artificial Intelligence

Intelligence

Before we discuss AI, we need to define intelligence. Many experts have proposed definitions, and all of them are subjective and nebulous. Here are some examples.

“Intelligence is a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience.” – statement signed by 52 intelligence researchers

“Any system …that generates adaptive behavior to meet goals in a range of environments can be said to be intelligent.” – D. Fogel

“Intelligence is the ability of an information processing agent to adapt to its environment with insufficient knowledge and resources.” – P. Wang

The key attributes of intelligence are adaptability, the ability to learn from experience, and the ability to perform novel tasks.

Modern AI solutions have nothing comparable to human intelligence; they lack the attributes of intelligence listed above. Such systems are known as Artificial Narrow Intelligence (ANI), or weak AI. Narrow AI models are trained for specific tasks, e.g., object detection in an image. All existing AI solutions are cases of ANI.

For an AI model to have human-like intelligence, it would need what is known as Artificial General Intelligence (AGI), or strong AI. No model with AGI exists today, and none is expected in the foreseeable future. A true Fully Self Driving (FSD) car is an example of a problem that would require AGI.

Universal Intelligence: A Definition of Machine Intelligence

AI Task Categories

Francois Chollet, creator of the Deep Learning library Keras and a well-known AI expert, has an interesting way of categorizing AI tasks and solutions.

With Automation, tasks usually performed by humans are automated by AI. The solutions are based on ANI. Most current AI solutions belong to this category.

With Assistive solutions, AI extends our ability to perform certain tasks. There are few applications of this kind so far. One example is using Deep Learning based object detection and segmentation to help a radiologist make decisions.

Under the Autonomous category are AI agents that exist and thrive independently, performing diverse sets of tasks. AGI is a prerequisite for this, and no such agent exists today. A robot that can operate in any environment would be an example of autonomous AI.

AI is cognitive automation, not cognitive autonomy

AI Solution Categories

There are three kinds of AI solutions: inferential, pattern mining, and generative. With inference, a conclusion is drawn from given facts. Most AI problems belong to this category.

Some AI solutions extract patterns from data; they are essentially pattern matchers. Generative AI is used to generate new data, e.g., text or images. Lately, there has been an explosion of generative models. They may be text-to-text, like ChatGPT, or text-to-image, like DALL-E.

Inferential AI Techniques

There are three kinds of inference techniques: deductive, inductive, and abductive.

In deductive inference, we deduce new information or conclusions from known facts and rules. It’s a top-down approach based on existing knowledge about the world. Unlike inductive inference, no learning is involved.
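A minimal sketch of deductive inference, assuming facts and rules are plain propositional symbols (the rule set below is a hypothetical example): forward chaining repeatedly fires every rule whose premises are all known, until no new conclusion can be derived. Nothing is learned; every conclusion follows from the given knowledge.

```python
def forward_chain(facts, rules):
    """Return every fact derivable from `facts` using `rules`.

    Each rule is a (premises, conclusion) pair: when all premises are known,
    the conclusion is added. Loop until a full pass derives nothing new.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base: a classic syllogism chained one step further.
rules = [
    ({"socrates_is_human"}, "socrates_is_mortal"),
    ({"socrates_is_mortal"}, "socrates_will_die"),
]
print(sorted(forward_chain({"socrates_is_human"}, rules)))
# ['socrates_is_human', 'socrates_is_mortal', 'socrates_will_die']
```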

In inductive inference, we arrive at a conclusion by generalizing from specific facts or data. It’s a bottom-up approach. All Machine Learning solutions belong to this category. There are two steps: first, learning from examples to train a model; second, using the model to make inferences.
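To make the two steps concrete, here is a minimal sketch that assumes a linear relationship between input and output and uses a handful of hypothetical examples: ordinary least squares generalizes from the examples (training), and the fitted model is then applied to an unseen input (inference).

```python
def fit_line(xs, ys):
    """Step 1 (training): learn slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Step 2 (inference): apply the learned model to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical examples that happen to follow y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
model = fit_line(xs, ys)
print(predict(model, 10))  # 21.0
```

The model never saw x = 10; the answer comes from the general rule induced from the five examples.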

With abduction, we generate a hypothesis to explain given, often incomplete, facts. It’s also known as inference to the best explanation. It’s not strictly a deduction, and there is not enough evidence for an induction either. It enables humans to perform novel tasks. So far, there is no AI solution for abductive inference.

Difference between Inductive and Deductive reasoning

Machine Learning Techniques

Machine Learning is inductive: it’s data-driven and bottom-up. Here are the main Machine Learning techniques.

In Supervised Learning, a model is trained with human-provided labels and then used for inference. Training must happen before the model can make any inference.
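A nearest-centroid classifier is one of the simplest supervised methods and is used here purely as an illustration; the points and the labels `small` and `large` are hypothetical. Training averages the labeled examples of each class, and inference assigns a new point to the class with the closest average.

```python
import math
from collections import defaultdict


def train_centroids(features, labels):
    """Training: compute the mean feature vector of each labeled class."""
    groups = defaultdict(list)
    for x, label in zip(features, labels):
        groups[label].append(x)
    return {
        label: tuple(sum(col) / len(rows) for col in zip(*rows))
        for label, rows in groups.items()
    }


def classify(centroids, x):
    """Inference: pick the class whose centroid is closest to x."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


# Hypothetical labeled data; the labels play the role of human annotations.
X = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
y = ["small", "small", "small", "large", "large", "large"]
model = train_centroids(X, y)
print(classify(model, (2, 2)), classify(model, (7, 9)))  # small large
```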

In Unsupervised Learning, we discover patterns in data. It performs pattern discovery, and no data labeling is needed.
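Clustering is a common form of pattern discovery. This sketch implements k-means (Lloyd’s algorithm) on hypothetical 2-D points; no labels are supplied, and the algorithm finds the two groups on its own. The iteration cap and random seed are illustrative assumptions.

```python
import math
import random


def kmeans(points, k, iters=20, seed=0):
    """Group unlabeled points into k clusters (Lloyd's algorithm)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k randomly chosen points
    for _ in range(iters):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster
        # (an empty cluster keeps its previous center).
        new_centers = [
            tuple(sum(col) / len(c) for col in zip(*c)) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
        if new_centers == centers:  # converged: assignments will not change
            break
        centers = new_centers
    return centers, clusters


# Hypothetical unlabeled 2-D points forming two visible groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centers, clusters = kmeans(points, k=2)
print(sorted(sorted(c) for c in clusters))
```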

Reinforcement Learning is used for making decisions under uncertain conditions. The system makes decisions based on its current state. A reward arrives with some delay and is used to train the model.
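As a minimal sketch of learning from delayed reward, the tabular Q-learning loop below uses a hypothetical five-state corridor in which only the final state pays a reward. The hyperparameters (`alpha`, `gamma`, `epsilon`, the episode count) are illustrative assumptions, not tuned values.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    The agent starts in state 0 and moves left (action 0) or right (action 1).
    Only entering the last state gives a reward of 1, so the reward for the
    early moves arrives with a delay.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Move the estimate toward reward + discounted value of the best next action.
            best_next = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q


q = q_learning()
policy = ["left" if left > right else "right" for left, right in q[:-1]]
print(policy)  # the learned policy should be to move right in every state
```

The discount factor `gamma` is what lets the single reward at the end propagate back to the first move.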

Self Supervised Learning is similar to supervised learning, except that part of the data itself is used as the target for the model to learn. Explicit human labeling is not necessary.
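The idea is easiest to see with text. This sketch, assuming simple whitespace tokenization and a hypothetical context size of two words, turns a raw sentence into (context, target) pairs where the target is just the next word. Next-word prediction of this kind is the objective GPT-style models are trained on.

```python
def next_token_pairs(text, context_size=2):
    """Turn unlabeled text into (context, target) training pairs.

    The target is simply the word that follows the context, so the data
    supervises itself and no human labeling is required.
    """
    words = text.split()
    return [
        (tuple(words[i - context_size:i]), words[i])
        for i in range(context_size, len(words))
    ]


pairs = next_token_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```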

In Generative Modeling, new data is generated from the learned statistical distribution of the data-generating process.
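The simplest possible illustration, assuming the data are one-dimensional and roughly normally distributed: learn the mean and standard deviation from hypothetical observations, then sample new values from that distribution. Deep generative models learn far richer distributions, but the fit-then-sample idea is the same.

```python
import random
import statistics


def fit_gaussian(data):
    """Learn the parameters of a normal distribution from observed data."""
    return statistics.fmean(data), statistics.stdev(data)


def generate(mu, sigma, n, seed=0):
    """Draw new synthetic samples from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


observed = [4.8, 5.1, 5.0, 4.9, 5.2]  # hypothetical measurements
mu, sigma = fit_gaussian(observed)
print(round(mu, 2), round(sigma, 3))  # 5.0 0.158
new_samples = generate(mu, sigma, n=3)
```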

Other Machine Learning Techniques

Anomaly Detection uses unsupervised learning to detect abnormal patterns in data. More generally, unsupervised learning is used for discovering normal patterns in data, e.g., clustering.
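A minimal sketch, assuming roughly normally distributed readings: learn the mean and standard deviation of historical data without any anomaly labels, then flag new values that lie more than a threshold number of standard deviations away (a z-score test). The threshold of 3 is a common rule of thumb rather than a universal setting, and the readings are hypothetical.

```python
import statistics


def fit_baseline(history):
    """Learn what 'normal' looks like: mean and sample standard deviation."""
    return statistics.fmean(history), statistics.stdev(history)


def is_anomaly(value, baseline, threshold=3.0):
    """Flag a value whose z-score (distance from the mean in std units) exceeds threshold."""
    mean, std = baseline
    return abs(value - mean) / std > threshold


history = [10, 11, 9, 10, 12, 10, 11, 9, 10, 8]  # hypothetical sensor readings
baseline = fit_baseline(history)
for reading in [11, 14, 50]:
    print(reading, is_anomaly(reading, baseline))
```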

Time Series Forecasting is essentially a prediction problem where the next value in a sequence is predicted based on past data.
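As a minimal sketch, assume the series follows a first-order autoregressive model x[t] = phi * x[t-1] + c, a much simpler relative of ARIMA. The coefficients can be fitted by least squares on consecutive pairs and then rolled forward, feeding each prediction back in as the next input. The series below is synthetic.

```python
def fit_ar1(series):
    """Fit x[t] = phi * x[t-1] + c by least squares on consecutive pairs."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    c = mean_curr - phi * mean_prev
    return phi, c


def forecast(series, phi, c, steps=1):
    """Roll the fitted model forward, feeding each prediction back in."""
    preds, last = [], series[-1]
    for _ in range(steps):
        last = phi * last + c
        preds.append(last)
    return preds


# Synthetic series generated by x[t] = 0.5 * x[t-1] + 2, starting from 0.
series = [0.0, 2.0, 3.0, 3.5, 3.75, 3.875]
phi, c = fit_ar1(series)
print(forecast(series, phi, c, steps=2))  # approximately [3.9375, 3.96875]
```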

Machine Learning Data Types

Tabular data is essentially set data, i.e., a collection of records (rows) whose order carries no meaning. It’s the most pervasive data type for ML applications.

Two-dimensional data, such as images, has spatial correlation; video adds temporal correlation as well. Such data requires special ML modeling techniques, e.g., Convolutional Neural Networks (CNN).

Sequential data, such as time series and text, is autoregressive. It requires special ML modeling techniques, e.g., Auto-Regressive Integrated Moving Average (ARIMA) or Recurrent Neural Networks (RNN).

Machine Learning Model Life Cycle

- Collect data and perform exploratory analysis

- Prepare training data

- Label data (for predictive models only)

- Train model (for predictive models only)

- Test and validate the model

- Deploy model

- Track model performance for data drift

- If necessary, retrain the model and start over

Deep Learning

The current AI boom of the last 15 years has been driven by Deep Learning (DL). DL models are built from layers that each perform an affine transformation followed by a non-linear activation function.
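The layer computation can be sketched in a few lines of plain Python. The weights, biases, and the choice of ReLU as the activation are hypothetical; real frameworks perform the same arithmetic with vectorized tensor operations and learn the weights from data.

```python
def dense_layer(inputs, weights, biases, activation):
    """One layer: affine transform (W·x + b), then an element-wise non-linearity."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


# Hypothetical hand-picked weights: 2 inputs -> 2 hidden units -> 1 output.
x = [1.0, 2.0]
hidden = dense_layer(x, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0], relu)
output = dense_layer(hidden, [[2.0, 1.0]], [0.5], identity)
print(hidden, output)  # [0.0, 2.0] [2.5]
```

Without the non-linearity, stacking layers would collapse into a single affine transform, which is why the activation matters.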

DL models are known as universal function approximators: any continuous function can, in principle, be approximated to arbitrary accuracy given enough layers and nodes per layer.

DL models can be trained with supervision for inference, or without supervision for pattern extraction and generation. They excel with image, video, audio, and text data.

Deep Learning Networks

Feed Forward Network (FFN). This is the simplest network. It is also used as a building block of more complex networks.

Convolutional Neural Network (CNN). Mostly used for image data. Its architecture is inspired by the human visual system.

Recurrent Neural Network (RNN). Used for autoregressive sequence data. This is the first-generation network for autoregressive data.

Long Short-Term Memory (LSTM) network. The next-generation network for autoregressive data.

Transformer is the latest and greatest network for autoregressive data. All modern Large Language Models (LLM) are powered by the Transformer. It has also been used successfully for vision problems.

Auto Encoder is an unsupervised model. It finds a lower-dimensional representation of data. It can also be used as a generative model.

Variational Auto Encoder (VAE). Improves on the Auto Encoder by learning the underlying distribution of the data. Another generative model.

Generative Adversarial Network (GAN). Another generative model, which came after the VAE. Uses two networks, one generative and one discriminative, trained against each other.

Diffusion Model is the latest generation of generative models. Used in recent text-to-image models such as DALL-E 2.
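Of the networks above, the Transformer is the one behind modern LLMs. Its core operation is scaled dot-product attention: each query is compared with every key, the scores are divided by the square root of the key dimension and normalized with softmax, and the resulting weights mix the values. Below is a plain-Python sketch with tiny hypothetical vectors; a real Transformer adds learned projections, multiple heads, and feed-forward layers.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each output row is a softmax(q·k / sqrt(d))
    weighted average of the value vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Hypothetical 2-D vectors: the query matches the first key much better.
queries = [[1.0, 0.0]]
keys = [[3.0, 0.0], [0.0, 3.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
print(attention(queries, keys, values))
```

The output leans toward the first value vector because its key is the better match for the query.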

Deep Learning Language Models

The list is not exhaustive. Please refer to the citation below for more.

BERT: A pre-trained language model based on the Transformer. Contains an encoder only.

GPT: A pre-trained language model based on the Transformer. Contains a decoder only and is trained on next-word prediction. A generative model that produces text in response to a prompt.

ChatGPT: A conversational bot based on GPT. It receives additional training with Reinforcement Learning from human feedback on the chatbot’s responses.

Leading Language Models For NLP

Deep Learning Vision Models

The list is not exhaustive. Please refer to the citation below for more.

AlexNet: A pre-trained vision model based on a CNN. It contains five convolutional layers and three fully connected layers.

VGGNet: A pre-trained vision model based on a CNN. Its best-known variant, VGG-16, has 16 weight layers (13 convolutional and 3 fully connected), with pooling layers in between.

ResNet: A pre-trained vision model based on a CNN. It contains convolutional, pooling, and fully connected layers, and additionally uses skip (residual) connections.

Mask R-CNN: A pre-trained vision model for object detection in an image, extending Faster R-CNN. Generates a bounding box and a segmentation mask for each object.

VGG-Face: A pre-trained vision model trained specifically for face recognition. Based on VGG.

DeepLabV3: A pre-trained vision model based on a CNN for image segmentation. In segmentation, a label is assigned to each pixel.

Deep Learning Cross Modal Models

The list is not exhaustive. Please refer to the citation below for more.

DALL-E: A pre-trained, generative text-to-image model based on a Transformer and a Discrete Variational Autoencoder (dVAE). Given a text prompt, it generates an image.

GLIDE: A pre-trained, generative text-to-image or image-to-image model based on diffusion.

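CLIP: A pre-trained, contrastive image-text model rather than a generative one. It learns a shared embedding space in which matching images and text lie close together, enabling tasks such as zero-shot image classification. Images are encoded with a ResNet or a Vision Transformer; text is encoded with a Transformer.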

Cross Modal Deep Learning Models

Pretraining and Transfer Learning

Vision and language models are very expensive to train, and only a few large companies can afford the cost.

Models are pre-trained and repurposed for other, similar tasks. Sometimes no additional training or fine-tuning is necessary.

Generally, fine-tuning with task-specific data is necessary for better performance: some layers toward the end of the network are unfrozen and their weights re-learned on the domain-specific task.

Introduction to Transfer Learning

Deep Learning Drawbacks

Deep learning models are underspecified, i.e., the model can choose any function within a function space that fits the input and output, and many such functions exist. You have no control over which features the model picks up during training, so it may latch onto spurious correlations.

Model performance may drop significantly with slight perturbations of the input, known as adversarial examples. This makes the models very brittle.
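Brittleness is easy to demonstrate on a linear classifier, which stands in here for a deep network. This sketch uses the idea behind the Fast Gradient Sign Method (FGSM): shift every feature by a small amount epsilon in the direction that pushes the score toward the other class. The weights, input, and epsilon are hypothetical; with 100 features, a change of 0.02 per feature is enough to flip the prediction.

```python
def score(weights, x):
    """Linear classifier: a positive score means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_perturb(weights, x, epsilon):
    """Shift each feature by epsilon toward the opposite class.

    For a linear model the gradient of the score with respect to x is just
    `weights`, so only the sign of each weight is needed.
    """
    direction = -1 if score(weights, x) > 0 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input with 100 features.
weights = [0.1] * 100
x = [0.01] * 100
x_adv = fgsm_perturb(weights, x, epsilon=0.02)
print(score(weights, x) > 0, score(weights, x_adv) > 0)  # True False
```

Each individual change is tiny, but they all push in the same direction, and in high dimensions the effect adds up.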

AI Fallacy

“If the human brain were so simple that we could understand it, we would be so simple that we couldn’t.” – Emerson Pugh

Artificial General Intelligence

All existing AI solutions are really examples of Artificial Narrow Intelligence (ANI). AGI solutions would need intelligence as defined earlier. So far, AGI seems like science fiction, although there are some initiatives to move beyond ANI.

To achieve AGI, the following human cognitive functions need to be understood and replicated. For a certain class of problems, not all may be required:

- Perception

- Motor control

- Memory

- Reasoning

- Attention

- Language

- Thinking

- Decision-making

- Consciousness

AGI is truly multidisciplinary, spanning many fields, some of which are outside science and engineering. Any successful AGI initiative will require collaboration between these diverse fields:

- Computer Science

- Math and Statistics

- Philosophy

- Cognitive Psychology

- Cognitive Neuroscience

Artificial General Intelligence Approaches

There are various approaches towards achieving AGI. In these areas, progress has been very limited so far.

In Human Brain Emulation, brain functions are simulated on computer hardware and software. The key drawback of this approach, however, is that it requires the development of in vivo technology to scan the relevant sections of the brain.

Algorithmic Probability involves predicting the environment around the agent using Solomonoff’s induction (2010) and Bayes’ theorem. Based on that prediction, the agent chooses the action that maximizes future reward up to a certain horizon, usually taken as the agent’s lifetime. In principle, such a system allows the agent to make the best possible decision in any given situation without prior knowledge of its environment.

The idea of an Integrative Cognitive Architecture is to identify the central cognitive processes that occur within the human brain and then replicate them individually within an AGI. A cognitive architecture is a theory about how the human mind is structured.

Such an architecture mixes symbolic and subsymbolic components that supplement each other: the symbolic agents manage reasoning and understanding, while the subsymbolic agents are responsible for learning. This general approach is called Neuro Symbolic AI and is considered the most promising.

Approaches to Artificial General Intelligence

AI journey

AI will keep impacting our lives, industry, and the economy in significant ways. No one has a crystal ball for the future of AI.